Exporting and Importing Process Run Time Data Using the CLI

You can move process run time data from one INUBIT Process Engine to another. Optionally, you can filter the data to be moved using filter expressions.

All process commands described below are available in both the interactive mode (refer to Interactive CLI Mode) and the script mode (refer to Script Mode of the CLI).

Overview

On the source system, process run time data are exported.

Run time data are written into an archive.

Exported processes are stopped on the source system.

Once the run time data of all processes to be exported have been exported, an operationId is generated and displayed.

This operationId identifies the entire move operation and is required for all subsequent steps, so it must be kept available.

The archive file is stored on the Process Engine to which the CLI is connected. After the export has finished successfully, the path to the archive file is displayed on the console (refer to Exporting the Source System Run Time Data Using CLI).

The archive is made available via a path to the CLI that has been started for importing.

The run time data contained in the archive are imported on the destination system. Now, the same processes exist on both the source system and the destination system but they are not executed.

If all run time data have been imported correctly, the corresponding processes are resumed on the destination system using the `operationId`.

If all processes have been resumed successfully on the destination system, the exported processes are deleted on the source system.

Otherwise, the exported processes are resumed using the operationId on the source system.

The audit log entries are not exported.

Prerequisites

- You have started the CLI as administrator.

- The modeling data (workflows and modules), the user structure, and the user group structure must be identical on the source system and the destination system.

- If you want to filter run time data, for example to export only the data of a certain tenant, the Monitoring must be configured in the Workbench and the workflows must be configured accordingly, refer to Filtering the Run Time Data to be Exported.

When moving processes, the following steps are executed:

1. Exporting the run time data of processes is initiated on the source system, refer to Exporting the Source System Run Time Data Using CLI.

   - Processes in state PROCESSING are executed until they have finished successfully or have reached one of the following states:

     - WAITING
     - SUSPEND
     - ERROR
     - RETRY

   - Processes in state QUEUED are not executed by the scheduler and are not exported.

     If a sub-workflow is in the QUEUED state, it is moved anyway if the calling process is in the PROCESSING state and the sub-workflow has the same process ID.

   - Processes whose run time data are marked for exporting are blocked and are no longer executed.

2. Run time data of the processes to be exported from the source system are written to a ZIP archive.

   The archive is stored in the directory `<inubit-installdir>/inubit/server/ibis_root/ibis_data/backup` on the Process Engine to which the CLI was connected. If the CLI was connected to a load balancer, the executing node is ambiguous. Hence, the host name of the Process Engine on which the archive was created is returned. It is strongly recommended to connect to a node rather than to a load balancer.

3. The ZIP archive must be transferred to a system that can be reached using a path.

4. The ZIP archive must be imported on the destination system, refer to Importing Run Time Data Using CLI.

5. The execution of the processes whose run time data have been imported successfully on the destination system can be resumed, refer to Resume Blocked Processes Using CLI.

6. If all processes have been resumed successfully on the destination system, they must be removed from the source system, refer to Deleting Blocked Processes Using CLI. Otherwise, the processes can be resumed on the source system, refer to Resume Blocked Processes Using CLI.
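The decision logic of these steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `plan_move` and its parameters are hypothetical and not part of the product, and the sketch merely assembles the `process` sub-commands documented on this page instead of invoking the CLI. In a real run, the operationId is only known after the export has finished.

```python
from typing import List, Tuple


def plan_move(export_name: str, import_path: str, operation_id: str,
              import_ok: bool, resume_ok: bool) -> List[Tuple[str, str]]:
    """Return (system, command) pairs in the order they would be issued.

    Hypothetical helper: the two flags stand for the outcome of the
    import and of the resume on the destination system.
    """
    steps: List[Tuple[str, str]] = [
        ("source", f"process export {export_name}"),
        ("destination", f"process import {import_path}"),
    ]
    if import_ok:
        steps.append(("destination", f"process resume {operation_id}"))

    if import_ok and resume_ok:
        # Everything runs on the destination: remove the blocked originals.
        steps.append(("source", f"process deleteBlocked {operation_id}"))
    else:
        if import_ok:
            # Resume on the destination failed: remove the blocked copies there
            # (see the description of "process deleteBlocked").
            steps.append(("destination", f"process deleteBlocked {operation_id}"))
        # Fall back to the source system.
        steps.append(("source", f"process resume {operation_id}"))
    return steps


for system, command in plan_move("export.zip", "/mnt/share/export.zip",
                                 "<operationId>", True, True):
    print(f"{system}: {command}")
```

The point of the sketch is that the same operationId drives every step after the export, and that the source system is only cleaned up once the destination system has taken over.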

Filtering the Run Time Data to be Exported

Filtering by User-defined Data

To specify the run time data to be exported, e.g. the data of a certain tenant, you can use the user defined columns in the Queue Manager and the variables mapping.

You can specify multiple columns and combine them into a complex filter condition.

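Refer to the sections Defining the Filter Name, Displaying the Filter Name Column, and Setting the Filter Name Value.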

Defining the Filter Name

Proceed as follows

1. Open the tab Administration > General Settings > Logging > User defined columns.

2. Click in the Value field in the Queue Manager: user defined column 1 line.

   You can use the other user defined columns to define filter names, too.

3. Enter the filter name, e.g. tenantID.

4. Click the Save button.

→ The user defined column 1 is named according to the given value.

To define more than one filter, repeat steps 2, 3, and 4. For how to combine multiple filters, refer to Command Reference: Exporting and Importing Run Time Data.

Displaying the Filter Name Column

Proceed as follows

-

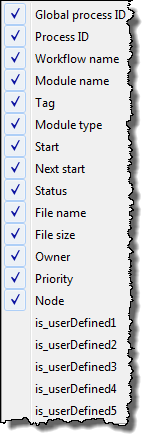

Open the tab Monitoring > Queue Manager.

-

Right-click the table heading.

-

Click the item(s), e.g. is_userDefined1 if it is not yet checked.

→ The user defined column 1 is displayed as a column in the Queue Manager.

Setting the Filter Name Value

You must set the filter name value using the variables mapping.

For each Technical Workflow to be exported, you have to define a variable named identically to the value you have specified for the user-defined column.

Proceed as follows

-

Open the Designer > Server tab in the INUBIT Workbench.

-

Open the Technical Workflow whose run time data are to be exported for editing.

-

Open the Variables docking window right of the workspace.

-

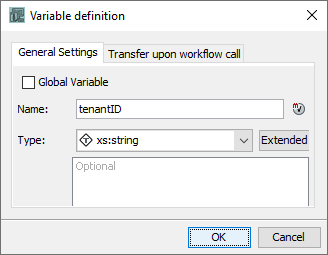

Click the New button

.

. -

The variable name must be identical to the value you have specified for the user-defined column before.

-

Select the

xs:stringtype, if necessary.

-

Add a comment, if necessary.

-

Click OK to save the new variable.

-

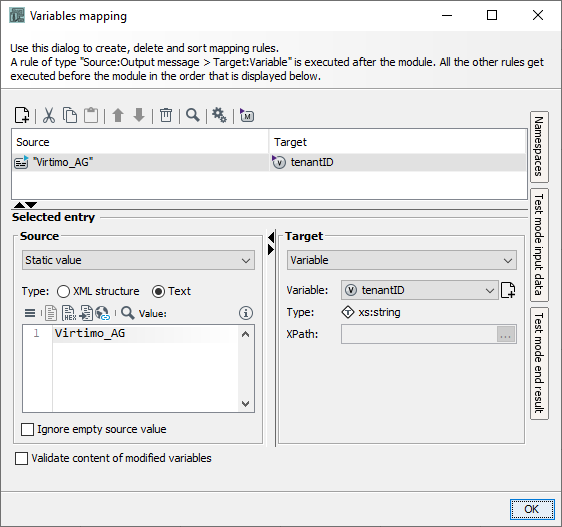

Open the Variables Mapping dialog for the module that needs the information to be moved using the context menu item Edit variables mapping.

Which module needs this information depends on your workflow. If you specify a filter to export only certain run time data, e.g. data of a certain tenant, the process data are moved only if the variable is set to the expected value, e.g. the tenant name.

If the variable is not set for a process, e.g. because it is queued, it will be moved only if it has the same process ID as another process for which the variable is set, e.g. it is a sub process of the process for which the variable is set.

-

For example, select Static value for the source and set the value according to the variable.

Only alphanumeric characters are allowed in the filter name value, except for defining a path to a csv file containing process IDs.

-

Select the variable you have defined to specify certain run time data to be exported.

-

Click OK to save the variable mapping.

-

Publish the Workflow.

→ The variables mapping is assigned to the module. Now, it has a V icon in the bottom left corner.
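The selection rule described in this procedure (a process is moved if its variable has the expected value, or if it shares its process ID with such a process) can be modeled as follows. This is a simplified illustration, not the Process Engine's implementation: the record layout with the keys `name` and `process_id` and the function `select_for_export` are assumptions made for this sketch.

```python
from typing import Dict, List, Optional

Process = Dict[str, Optional[str]]


def select_for_export(processes: List[Process], variable: str,
                      expected: str) -> List[str]:
    """Return the names of the processes that the filter would move."""
    # Process IDs of all processes whose variable has the expected value.
    matching_ids = {p["process_id"] for p in processes
                    if p.get(variable) == expected}
    # A process without the variable is still moved if it shares the
    # process ID of a matching process (e.g. a queued sub-process).
    return [str(p["name"]) for p in processes
            if p["process_id"] in matching_ids]


processes = [
    {"name": "order", "process_id": "100", "tenantID": "Virtimo"},
    {"name": "order-sub", "process_id": "100", "tenantID": None},
    {"name": "invoice", "process_id": "200", "tenantID": "Other"},
    {"name": "queued", "process_id": "300", "tenantID": None},
]
print(select_for_export(processes, "tenantID", "Virtimo"))
# → ['order', 'order-sub']
```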

Filtering by ProcessIDs

You can reduce the runtime data to be exported by just exporting data of certain ProcessIDs.

Prerequisites

You know the ProcessIDs for the runtime data to be exported.

Proceed as follows

1. Create a ProcessIDs file (text file) containing the comma-separated ProcessIDs for which the runtime data are to be exported.

   Example content of a ProcessIDs file:

   100, 101, 102, 103, 104, 105, 106, 107

2. Start the runtime data export with the option `--filterProcessIds <pathToProcessIdFile>`, with `pathToProcessIdFile` as the complete path to the created ProcessIDs file (refer to Exporting the Source System Run Time Data Using CLI).

→ The system

- reads the text file with the ProcessIDs,
- blocks the processes contained in the file,
- stores the runtime data of these processes in the export archive,
- returns the operationId.

For all nonexistent processes from the ProcessIDs file, a warning appears in the Trace Log.
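If the ProcessIDs are already available in a script, the ProcessIDs file can be generated instead of typed. The following Python sketch writes the IDs in the comma-separated form of the example content above. The function name `write_process_ids_file` is hypothetical, and whether the CLI tolerates other separators or a trailing newline is not stated here, so the sketch reproduces the documented format exactly.

```python
from pathlib import Path
from typing import Iterable


def write_process_ids_file(path: str, process_ids: Iterable[int]) -> str:
    """Write the ProcessIDs as one comma-separated line and return the content."""
    content = ", ".join(str(pid) for pid in process_ids)
    Path(path).write_text(content, encoding="utf-8")
    return content


if __name__ == "__main__":
    # Writes "100, 101, 102, 103, 104, 105, 106, 107" to the given file.
    write_process_ids_file("processIds.txt", range(100, 108))
```

The complete path of the resulting file is then passed to `--filterProcessIds`.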

Exporting the Source System Run Time Data Using CLI

Prerequisites

The user has the Configuration right.

Call up

- Interactive mode

  startcli [-u <user account>] [-p <password>] ... process export {<absolute path>|<archive file name>} [--filterExpression <expression>] [--filterProcessIds <pathToProcessIdFile>]

- Script mode

  startcli [-u <user account>] [-p <password>] --execCommand "process export <archive filename> [--filterExpression <expression>] [--filterProcessIds <pathToProcessIdFile>]"

Parameters

- `absolute path`: Absolute archive file path

- `archive file name`: Archive file name. The archive file is written to the `<inubit-installdir>/inubit/server/ibis_root/ibis_data/backup` directory.

- `expression`: Filter expression, refer to Command Reference: Exporting and Importing Run Time Data

The operationId and the file path are returned after the command has finished.

The export archive has the same structure as the backup archive.

Example

- Interactive mode

  process export export_2015_08_04.zip --filterExpression 'tenantID=Virtimo AND location in (Berlin, Hamburg)'

- Script mode

  startcli -u jh -p inubit --execCommand "process export export_2015_08_04.zip --filterExpression 'tenantID=Virtimo AND location in (Berlin, Hamburg)'"
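When the script mode call is issued from another program, the nested quoting (double quotes around the command, single quotes around the filter expression) is easy to get wrong. One way to avoid shell quoting altogether is to build an argument list, as in the following Python sketch. The function `build_export_argv` is hypothetical, and the executable name `startcli` is taken from the call above; the actual file name and location on a given platform are assumptions to verify.

```python
from typing import List, Optional


def build_export_argv(user: str, password: str, archive: str,
                      filter_expression: Optional[str] = None,
                      process_ids_file: Optional[str] = None) -> List[str]:
    """Build the script mode argument list for "process export"."""
    command = f"process export {archive}"
    if filter_expression is not None:
        if "'" in filter_expression:
            raise ValueError("filter expression must not contain single quotes")
        # The filter expression must be single-quoted inside the command.
        command += f" --filterExpression '{filter_expression}'"
    if process_ids_file is not None:
        command += f" --filterProcessIds {process_ids_file}"
    # The whole command is passed as ONE argument after --execCommand.
    return ["startcli", "-u", user, "-p", password, "--execCommand", command]


argv = build_export_argv(
    "jh", "inubit", "export_2015_08_04.zip",
    filter_expression="tenantID=Virtimo AND location in (Berlin, Hamburg)",
)
print(argv[-1])
```

A list like this can be handed to `subprocess.run(argv)` without `shell=True`, so that the command string reaches the CLI as a single argument.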

Returned text

If the runtime data have been exported successfully, the following information is returned.

Runtime data export was successful!

The export file is located at: C:\inubit\server\Tomcat\bin\..\..\ibis_root\ibis_data\backup\export_2015_08_04.zip

Operation ID is: c85cb4a7-36e3-4b97-a585-105b6c056b4a

List Blocked Processes Using CLI

Prerequisites

The user has the Configuration right.

Call up

process listBlocked <operationId>

Use this command to check the processes that have been exported or imported and blocked for the given operationId.

Importing Run Time Data Using CLI

Prerequisites

- The archive is accessible via a path from the CLI used for importing.

- The user has the Configuration right.

Call up

process import <archive file path>

After the import, the processes are not started automatically.

They must be started using the process resume <operationId> command, refer to Resume Blocked Processes Using CLI.

After the imported processes have been started on the destination system, they must be deleted on the source system using the process deleteBlocked <operationId> command, refer to Deleting Blocked Processes Using CLI.

Otherwise, resume the blocked processes on the source system, refer to Resume Blocked Processes Using CLI.

Resume Blocked Processes Using CLI

Prerequisites

The user has the Configuration right.

Call up

process resume <operationId>

Use this command to resume the blocked processes with the given operationId on the destination system or, if resuming the processes on the destination system has failed, on the source system.

Deleting Blocked Processes Using CLI

Prerequisites

- All processes have been resumed successfully on the destination system using the given `operationId`.

- The user has the Configuration right.

Call up

process deleteBlocked <operationId>

Use this command to delete the blocked processes with the given operationId on the source system or, if resuming the processes on the destination system has failed, on the destination system.

Command Reference: Exporting and Importing Run Time Data

Call up

process export {<absolute path>|<file name>}

[--excludeModel]

[--filterExpression <expression>|

--filterProcessIds {<absolute path>|<file name>}]

import <file path>

deleteBlocked <operation ID>

listBlocked <operation ID>

resume <operationId>

To display the usage, you can use one of the following commands:

process -h

process --help

All process commands are also available in script mode, refer to Script Mode of the CLI.

Command options

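| Option | Description |
|---|---|
| `export <absolute path>` | To export run time data to the given absolute archive file |
| `export <file name>` | To export run time data to the given file name in the `<inubit-installdir>/inubit/server/ibis_root/ibis_data/backup` directory |
| `--excludeModel` | Model data like diagrams and modules are not exported. |
| `--filterExpression <expression>` | Optionally: Filtering run time data to be exported. The filter expression must be single-quoted. |
| `import <file path>` | To import run time data from the given archive |
| `deleteBlocked <operation ID>` | To delete blocked processes and run time data using a given operationId |
| `listBlocked <operation ID>` | To list blocked processes using a given operationId |
| `resume <operationId>` | To resume blocked processes using a given operationId |
|  | To validate the runtime data export zip archive |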

Example

process export myExport.zip --filterExpression 'location in (Bangalore, Berlin) AND countryName inFile /tmp/countryName.txt'
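Filter expressions like the one above can also be assembled programmatically. The following Python sketch covers only the forms that appear in the examples on this page (`=`, `in (...)`, `inFile`, and `AND`); other operators may exist but are not assumed here. All function names are hypothetical. The check for alphanumeric values follows the rule for filter name values stated earlier; restricting it to ASCII letters and digits is a conservative assumption.

```python
import re
from typing import Iterable

_ALNUM = re.compile(r"^[A-Za-z0-9]+$")


def _check(value: str) -> str:
    """Reject values that are not purely alphanumeric (ASCII assumed)."""
    if not _ALNUM.match(value):
        raise ValueError(f"not alphanumeric: {value!r}")
    return value


def equals(column: str, value: str) -> str:
    return f"{_check(column)}={_check(value)}"


def in_list(column: str, values: Iterable[str]) -> str:
    return f"{_check(column)} in ({', '.join(_check(v) for v in values)})"


def in_file(column: str, path: str) -> str:
    # File paths are exempt from the alphanumeric rule.
    return f"{_check(column)} inFile {path}"


def all_of(*conditions: str) -> str:
    return " AND ".join(conditions)


expression = all_of(
    in_list("location", ["Bangalore", "Berlin"]),
    in_file("countryName", "/tmp/countryName.txt"),
)
print(f"process export myExport.zip --filterExpression '{expression}'")
```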