Logging

Information about code execution, warnings and errors is written to log files for troubleshooting purposes. This section describes where the log files are located, how logging is configured and how the log level can be set.

Configuration file

Karaf uses Apache Log4j 2 for logging.

Its properties-based configuration file is located at [karaf]/etc/org.ops4j.pax.logging.cfg.

Logging files

The logging files are written to the directory [karaf]/data/log.

This directory is defined in the aforementioned configuration file via the Java system property ${karaf.log}.
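As an illustration of how ${karaf.log} is typically referenced, the following fragment sketches the file settings of a rolling file appender as found in a stock Karaf distribution. This is an assumption about the default layout; the key segment rolling and the file name karaf.log may differ in your installation.

```properties
# Sketch based on a stock Karaf configuration (assumption, not taken from BPC).
# ${karaf.log} resolves to the log directory, by default [karaf]/data/log.
log4j2.appender.rolling.fileName = ${karaf.log}/karaf.log
log4j2.appender.rolling.filePattern = ${karaf.log}/karaf.log.%i
```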

Several logging files are created by default. The following two are BPC-specific.

| Logger | Level | File |
|---|---|---|
| authentication | WARN | authentication.log |
| de.virtimo.bpc | WARN | bpc.log |

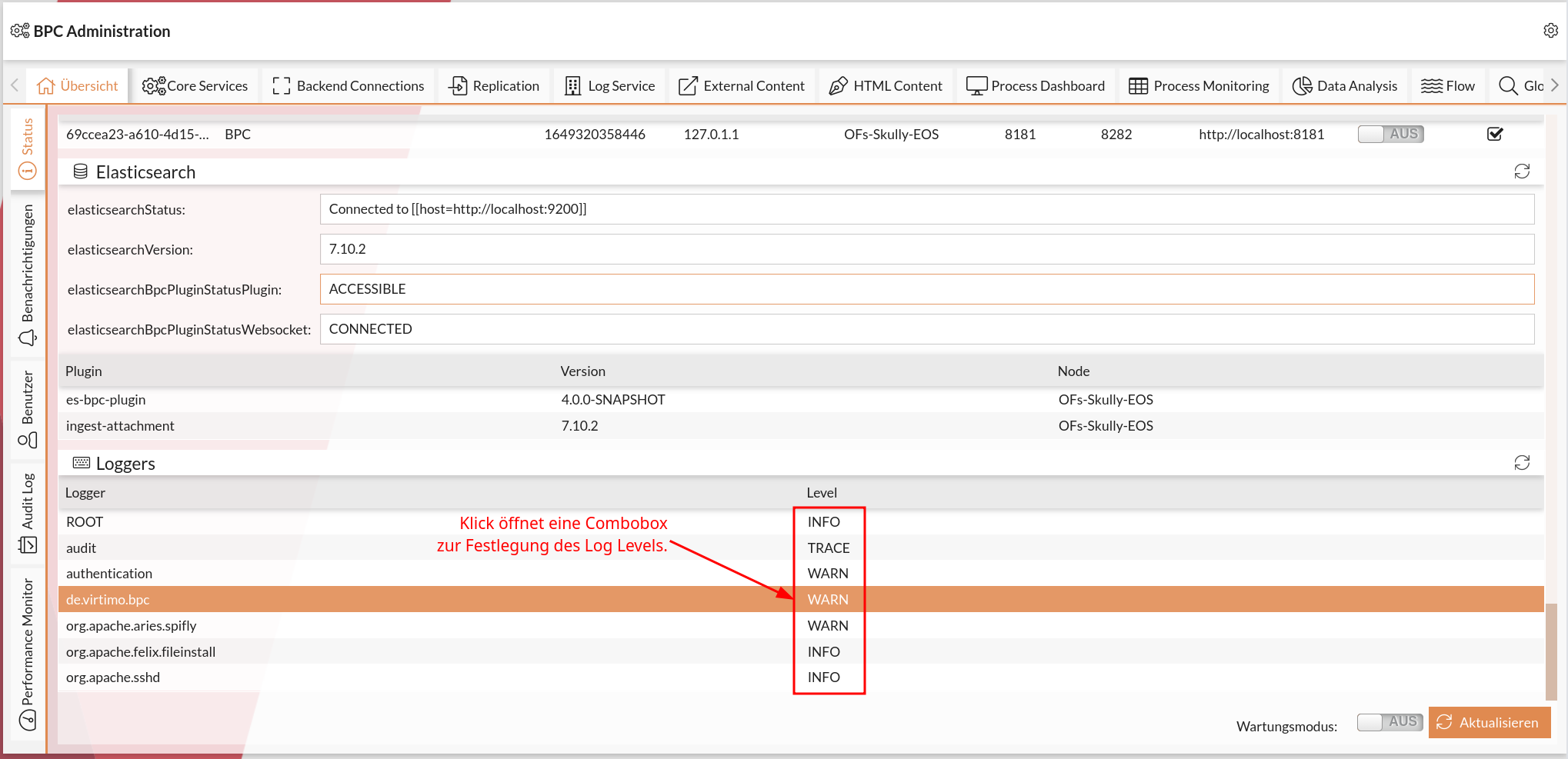

Set log level

The level of detail of the logged data is controlled by assigning a log level to each logger.

The possible log levels are TRACE, DEBUG, INFO, WARN, ERROR, FATAL, OFF. From left to right, each level logs less data than the one before it; OFF disables logging for the logger entirely.
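In the configuration file, a logger's level is set through its level property. The following is a minimal sketch, assuming a logger entry with the hypothetical key segment bpc; if org.ops4j.pax.logging.cfg already contains an entry for this logger, change the existing level line rather than adding a second entry.

```properties
# Root logger: applies to every logger without its own level.
log4j2.rootLogger.level = INFO

# Hypothetical key segment "bpc"; the logger is identified by its name property.
log4j2.logger.bpc.name = de.virtimo.bpc
log4j2.logger.bpc.level = WARN
```

With these settings, de.virtimo.bpc logs WARN, ERROR and FATAL messages, while loggers without an explicit level inherit INFO from the root logger.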

Using the Karaf shell command

The configured loggers and their log levels can be displayed using the Karaf shell command log:list.

virtimo@bpc()> log:list

Logger │ Level

─────────────────────────────┼──────

ROOT │ INFO

audit │ TRACE

authentication │ WARN

de.virtimo.bpc │ WARN

org.apache.aries.spifly │ WARN

org.apache.felix.fileinstall │ INFO

org.apache.sshd │ INFO

The level of a logger can be defined at runtime using the command log:set <LEVEL> <Logger>.

In the following example, the logger de.virtimo.bpc is changed from the log level WARN to INFO.

virtimo@bpc()> log:set INFO de.virtimo.bpc

Create new loggers

For targeted troubleshooting or for specific development purposes, it can be useful to define your own loggers. These can use individual log levels and log files to store relevant information separately from other log data.

Create logger via configuration file

- Open the file org.ops4j.pax.logging.cfg in the directory [karaf]/etc.

- Add a new section in which you define the name of the logger as well as its log level and appender. The following example logger records all messages of the H2 database package h2database:

# H2 database logger

log4j2.logger.h2database.name = h2database (1)

log4j2.logger.h2database.level = DEBUG (2)

log4j2.logger.h2database.additivity = false (3)

log4j2.logger.h2database.appenderRef.H2dbRollingFile.ref = H2dbRollingFile (4)

| 1 | The name of the logger, in this case h2database, which is used for H2 database logs. |

| 2 | The desired log level (e.g. TRACE, DEBUG, INFO, etc.). |

| 3 | Set additivity to false to prevent log messages from also being passed to the appenders of parent loggers, such as the root logger. |

| 4 | Here you specify which appender should write the logs of the new logger. In this example, H2dbRollingFile is used as the appender, which must also be defined in the configuration file. |

Be sure to observe the documentation of the Java package for which you are creating the logger.

- Add a new section in which you specify the appender. The following example appender saves all H2 database logs in the file h2.log and rotates the log files as soon as they reach 20 MB.

# H2 Appender

log4j2.appender.H2database.type = RollingRandomAccessFile (1)

log4j2.appender.H2database.name = H2dbRollingFile (2)

log4j2.appender.H2database.fileName = ${karaf.log}/h2.log (3)

log4j2.appender.H2database.filePattern = ${karaf.log}/h2.log.%i (4)

log4j2.appender.H2database.append = true (5)

log4j2.appender.H2database.layout.type = PatternLayout (6)

log4j2.appender.H2database.layout.pattern = ${log4j2.maskpasswords.pattern} (7)

log4j2.appender.H2database.policies.type = Policies (8)

log4j2.appender.H2database.policies.size.type = SizeBasedTriggeringPolicy (9)

log4j2.appender.H2database.policies.size.size = 20MB (10)

log4j2.appender.H2database.strategy.type = DefaultRolloverStrategy (11)

log4j2.appender.H2database.strategy.max = 5 (12)

| 1 | The type is set to RollingRandomAccessFile to create a log file that rotates when it reaches a certain size. |

| 2 | The name of the appender, here H2dbRollingFile, must be referenced in the logger as appenderRef. |

| 3 | The fileName specifies the path and name of the main log file, in this case h2.log. |

| 4 | filePattern specifies the naming pattern for rotated files. Rotated log files are named according to the pattern h2.log.%i, where %i stands for the number of the file. |

| 5 | append = true ensures that new logs are appended to the existing file instead of overwriting it. |

| 6 | The layout.type is set to PatternLayout to enable a customizable pattern for log output. |

| 7 | layout.pattern uses a predefined pattern for output formatting that masks passwords (by ${log4j2.maskpasswords.pattern}). |

| 8 | policies.type = Policies defines a collection of rules that determine when a log rotation is triggered. |

| 9 | policies.size.type = SizeBasedTriggeringPolicy means that the rotation is triggered based on the file size. |

| 10 | policies.size.size = 20MB specifies that rotation occurs as soon as the file reaches 20 MB. |

| 11 | strategy.type = DefaultRolloverStrategy specifies the default strategy for rotation behavior. |

| 12 | strategy.max = 5 limits the number of stored, rotated files to 5, with the oldest file being deleted when the limit is reached. |

- Logger changes are applied at runtime. It is not necessary to restart Karaf after you have saved the changes in org.ops4j.pax.logging.cfg.
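Note that in the example above the appender's key segment (H2database) and its name (H2dbRollingFile) are different: a logger references an appender by the value of its name property, not by the key segment. The following minimal sketch shows a second logger writing to the same appender; the logger name com.example.myapp and the key segment myapp are hypothetical and serve only as an illustration.

```properties
# Hypothetical second logger reusing the H2dbRollingFile appender defined above.
log4j2.logger.myapp.name = com.example.myapp
log4j2.logger.myapp.level = INFO
log4j2.logger.myapp.additivity = false
# ".ref" must match the appender's name property (H2dbRollingFile),
# not the key segment used in its definition (H2database).
log4j2.logger.myapp.appenderRef.H2dbRollingFile.ref = H2dbRollingFile
```

The numbered markers such as (1) in the examples above are documentation callouts and should not be copied into the configuration file.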

Create logger via shell command

It is possible to create and configure a new logger at runtime via the Karaf shell. This is particularly useful if you want to quickly activate additional logs for a specific package or class without having to edit the configuration file manually.

To create a logger via the shell, use the following steps:

- Open shell: Log in to the Karaf shell; see also Karaf access.

- Add logger: Use the command log:set to create a new logger with the desired log level. The following example adds a logger for the package h2database with the log level DEBUG:

log:set DEBUG h2database

- Display active loggers: To verify that the logger has been added, list all configured loggers with the following command:

log:list

|

If you have created the logger via shell command, the logs are written to the root logger (here |